LLM Environment Setup and Basic Testing

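Install Ubuntu 22.04 and switch to the Tsinghua (TUNA) mirror

sudo nano /etc/apt/sources.list

Replace the file contents with the following:

# Source (deb-src) mirrors are commented out by default to speed up apt update; uncomment them if needed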

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-updates main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-backports main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-backports main restricted universe multiverse

# deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-security main restricted universe multiverse

# # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-security main restricted universe multiverse

deb http://security.ubuntu.com/ubuntu/ jammy-security main restricted universe multiverse

# deb-src http://security.ubuntu.com/ubuntu/ jammy-security main restricted universe multiverse

# Pre-release (proposed) repository; enabling it is not recommended

# deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-proposed main restricted universe multiverse

# # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ jammy-proposed main restricted universe multiverse

Switch the input method to Sogou Pinyin

Install the fcitx input method framework

sudo apt install fcitx

Download the Sogou Pinyin installer package

cd ~/Downloads

sudo dpkg -i sogoupinyin_4.2.1.145_amd64.deb

sudo apt install libqt5qml5 libqt5quick5 libqt5quickwidgets5 qml-module-qtquick2

sudo apt install libgsettings-qt1

Remove the ibus input method framework

sudo apt purge ibus

Miniconda

Reference link. Download this installer script and run it:

wget https://repo.anaconda.com/miniconda/Miniconda3-py310_23.1.0-1-Linux-x86_64.sh

bash Miniconda3-py310_23.1.0-1-Linux-x86_64.sh

source ~/.bashrc

Create environments as needed:

conda create -n 31009text python=3.10.9

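conda create -n 31006sd python=3.10.6

Git

sudo apt install git

If you cannot reach GitHub through a proxy, install a Tampermonkey userscript that speeds up GitHub in the browser, or use another workaround.

Driver

Reference article

# Remove the driver that ships with the system

sudo apt purge 'nvidia*'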

sudo vi /etc/modprobe.d/blacklist.conf

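Add these two lines at the end of the file:

blacklist nouveau

options nouveau modeset=0

sudo update-initramfs -u

sudo reboot

After rebooting, check that nouveau is blocked; if the following command prints nothing, it worked:

lsmod | grep nouveau

Download the driver that matches your GPU model and install it: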

sudo chmod a+x NVIDIA-Linux-x86_64-525.89.02.run

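sudo ./NVIDIA-Linux-x86_64-525.89.02.run -no-x-check -no-nouveau-check -no-opengl-files

- -no-x-check: skip the check for a running X server during installation;

- -no-nouveau-check: skip the check for the nouveau driver during installation;

- -no-opengl-files: install only the driver files, not the OpenGL files; this one is optional

After installation, run the following command to see the highest CUDA version the driver supports; on the 2080 Ti used here it reports 12.0

nvidia-smi

Install CUDA

Go to https://developer.nvidia.com/cuda-toolkit-archive

and choose a version, then run the commands shown there to download and install it: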

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-ubuntu2204.pin

sudo mv cuda-ubuntu2204.pin /etc/apt/preferences.d/cuda-repository-pin-600

wget https://developer.download.nvidia.com/compute/cuda/12.0.0/local_installers/cuda-repo-ubuntu2204-12-0-local_12.0.0-525.60.13-1_amd64.deb

sudo dpkg -i cuda-repo-ubuntu2204-12-0-local_12.0.0-525.60.13-1_amd64.deb

sudo cp /var/cuda-repo-ubuntu2204-12-0-local/cuda-*-keyring.gpg /usr/share/keyrings/

sudo apt-get update

sudo apt-get -y install cuda

Verify the installation; note that the V is uppercase:

nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2022 NVIDIA Corporation

Built on Mon_Oct_24_19:12:58_PDT_2022

Cuda compilation tools, release 12.0, V12.0.76

Build cuda_12.0.r12.0/compiler.31968024_0
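To check the toolkit version from a script rather than by eye, the release number can be pulled out of the `nvcc -V` text. A minimal sketch, assuming the output keeps the `release X.Y` format shown above; the helper name `parse_nvcc_release` is hypothetical:

```python
import re

def parse_nvcc_release(nvcc_output: str):
    """Return (major, minor) from `nvcc -V` text, or None if no release line is found."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))

# Sample line copied from the output above; in practice the text could come from
# subprocess.run(["nvcc", "-V"], capture_output=True, text=True).stdout
sample = "Cuda compilation tools, release 12.0, V12.0.76"
print(parse_nvcc_release(sample))
```

Comparing the returned tuple against the version a given package expects (for example `(11, 7)`) gives a quick sanity check before building CUDA extensions.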

!!! Because the quantized GPTQ build is needed, CUDA 11.7.1 was actually installed, as follows:

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-ubuntu2204.pin

sudo mv cuda-ubuntu2204.pin /etc/apt/preferences.d/cuda-repository-pin-600

wget https://developer.download.nvidia.com/compute/cuda/11.7.1/local_installers/cuda-repo-ubuntu2204-11-7-local_11.7.1-515.65.01-1_amd64.deb

sudo dpkg -i cuda-repo-ubuntu2204-11-7-local_11.7.1-515.65.01-1_amd64.deb

sudo cp /var/cuda-repo-ubuntu2204-11-7-local/cuda-*-keyring.gpg /usr/share/keyrings/

sudo apt-get update

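sudo apt-get -y install cuda

Install cuDNN

Register for an NVIDIA Developer account

https://developer.nvidia.com/rdp/cudnn-download

Download the version that matches your CUDA install as a TAR archive, then follow the instructions; installation amounts to extracting the archive and copying the files into place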

Text-generation-webui

https://github.com/oobabooga/text-generation-webui

sudo apt install build-essential

# conda create -n 31009text python=3.10.9

conda env list

conda activate 31009text

# install PyTorch; the exact command depends on your GPU

pip3 install torch torchvision torchaudio

git clone https://github.com/oobabooga/text-generation-webui

cd text-generation-webui

pip install -r requirements.txt

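python server.py

GPTQ installation

Activate the conda 31009text environment,

then install following this branch, placing the clone in the repositories directory of text-generation-webui. If it still raises errors, see the hf configuration.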

git clone https://github.com/qwopqwop200/GPTQ-for-LLaMa

cd GPTQ-for-LLaMa

pip install -r requirements.txt

The hf configuration approach mainly pins GPTQ to a specific commit:

git clone -n https://github.com/qwopqwop200/GPTQ-for-LLaMa gptq-safe

cd gptq-safe && git checkout 58c8ab4c7aaccc50f507fd08cce941976affe5e0

Because this approach renames the folder to gptq-safe, GPTQ-Loader.py must be changed to match: add the new location to the path so that modelutils and find_layers can be found at run time.

sys.path.insert(0, str(Path("repositories/GPTQ-for-LLaMa")))

sys.path.insert(0, str(Path("repositories/GPTQ-for-LLaMa/utils")))

sys.path.insert(0, str(Path("repositories/gptq-safe")))

import llama_inference_offload

import modelutils

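from modelutils import find_layers

Test model loading

llama-13b-hf: failed. The model is about 40 GB and loading raised an out of memory error, so a 4-bit quantized model is required.

GPT4-x-alpaca-30b-4bit: failed; it hits a bug in text-generation-webui.

vicuna-13B-1.1-GPTQ-4bit-128g: succeeded.

chatglm-6b: succeeded when tested standalone; in text-generation-webui it fails with a trust_remote_code error.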
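The out-of-memory result above follows from simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter. A rough sketch, assuming 13 billion parameters and ignoring activations, the KV cache and quantization metadata (group scales and zero points), so real usage is somewhat higher; the helper name `weight_memory_gb` is hypothetical:

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in GB (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

params_13b = 13e9  # assumed parameter count for a "13B" model
for bits in (32, 16, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(params_13b, bits):.1f} GB")
```

Under these assumptions the 4-bit weights come to about 6.5 GB, which fits on a stock 11 GB 2080 Ti, while the 16-bit weights (about 26 GB) do not.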

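Load the 4-bit vicuna model as follows (it can also be selected in the web UI):

python server.py --model vicuna-13B-1.1-GPTQ-4bit-128g --wbits 4 --groupsize 128 --model_type Llama

Load the vicuna model

# First apply the delta weights to the original LLaMA weights to produce a directly usable model

python -m fastchat.model.apply_delta --base-model-path ./llama-13b-hf --target-model-path ~/TDowload/vicuna-13b --delta-path ~/TDowload/vicuna-13b-delta-v1.1/

# This step needs more than 64 GB of RAM, so it could not be completed for now; converting the original LLaMA model to a quantized hf model is left for later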

#convert LLaMA to hf

python convert_llama_weights_to_hf.py --input_dir /path/to/downloaded/llama/weights --model_size 7B --output_dir ./llama-hf

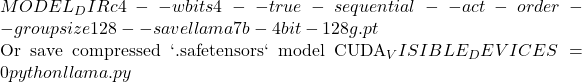

# Benchmark language generation with 4-bit LLaMA-7B:

# Save compressed model

CUDA_VISIBLE_DEVICES=0 python llama.py  {MODEL_DIR} c4 --wbits 4 --true-sequential --act-order --groupsize 128 --save_safetensors llama7b-4bit-128g.safetensors

# Benchmark generating a 2048 token sequence with the saved model

CUDA_VISIBLE_DEVICES=0 python llama.py

{MODEL_DIR} c4 --wbits 4 --true-sequential --act-order --groupsize 128 --save_safetensors llama7b-4bit-128g.safetensors

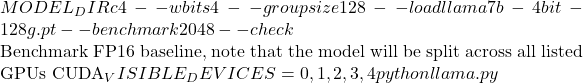

# Benchmark generating a 2048 token sequence with the saved model

CUDA_VISIBLE_DEVICES=0 python llama.py  {MODEL_DIR} c4 --benchmark 2048 --check

# model inference with the saved model

CUDA_VISIBLE_DEVICES=7 python llama_inference.py

{MODEL_DIR} c4 --benchmark 2048 --check

# model inference with the saved model

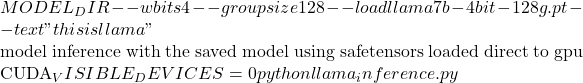

CUDA_VISIBLE_DEVICES=7 python llama_inference.py  {MODEL_DIR} --wbits 4 --groupsize 128 --load llama7b-4bit-128g.safetensors --text "this is llama" --device=0

# model inference with the saved model with offload(This is very slow. This is a simple implementation and could be improved with technologies like flexgen(https://github.com/FMInference/FlexGen).

CUDA_VISIBLE_DEVICES=0 python llama_inference_offload.py ${MODEL_DIR} --wbits 4 --groupsize 128 --load llama7b-4bit-128g.pt --text "this is llama" --pre_layer 16

It takes about 180 seconds to generate 45 tokens(5->50 tokens) on single RTX3090 based on LLaMa-65B. pre_layer is set to 50.

{MODEL_DIR} --wbits 4 --groupsize 128 --load llama7b-4bit-128g.safetensors --text "this is llama" --device=0

# model inference with the saved model with offload(This is very slow. This is a simple implementation and could be improved with technologies like flexgen(https://github.com/FMInference/FlexGen).

CUDA_VISIBLE_DEVICES=0 python llama_inference_offload.py ${MODEL_DIR} --wbits 4 --groupsize 128 --load llama7b-4bit-128g.pt --text "this is llama" --pre_layer 16

Summary: it is best to download an already-quantized model directly.

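Testing the MOSS model

Note that the plugin version differs from the others

Install Xunlei (Thunder) or another download manager

It is used to download models from huggingface.co

Install v2rayA or another proxy tool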

sudo snap install v2raya

# then configure it

Langchain

Install langchain in the conda 31009text environment

conda env list

conda activate 31009text

pip install langchain

Data Independent

Reference

https://www.youtube.com/watch?v=v6sF8Ed3nTE

https://colab.research.google.com/drive/115ba3EFCT0PvyXzFNv9E18QnKiyyjsm5?usp=sharing

pip -q install git+https://github.com/huggingface/transformers

pip install -q datasets loralib sentencepiece

pip -q install bitsandbytes accelerate

pip -q install langchain

Troubleshooting

Common issue 1

Hi @candowu, thanks for raising this issue. This is arising because the tokenizer in the config on the hub points to LLaMATokenizer. However, the tokenizer in the library is LlamaTokenizer. This is likely due to the configuration files being created before the final PR was merged in.

Change the LLaMATokenizer in tokenizer_config.json into lowercase LlamaTokenizer and it works like a charm.
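The rename can also be scripted. A minimal sketch, assuming the class name is stored under the `tokenizer_class` key of `tokenizer_config.json`; the function name and the model path in the usage comment are hypothetical:

```python
import json
from pathlib import Path

def fix_tokenizer_class(config_path) -> bool:
    """Rewrite LLaMATokenizer to LlamaTokenizer; return True if the file changed."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    if config.get("tokenizer_class") != "LLaMATokenizer":
        return False  # already correct, or the key is absent
    config["tokenizer_class"] = "LlamaTokenizer"
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return True

# Hypothetical usage; point it at your own model directory:
# fix_tokenizer_class("models/llama-13b-hf/tokenizer_config.json")
```

The function leaves the file untouched when the value is already correct, so it is safe to run more than once.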

Common issue 2

ValueError: The current `device_map` had weights offloaded to the disk. Please provide an `offload_folder` for them.

Adding this argument should resolve the error:

text-generation-webui/modules/models.py line 56

import torch
from transformers import AutoModelForCausalLM

# Will go out of RAM on Colab
checkpoint = "facebook/opt-13b"

# offload_folder tells the loader where to put weights that device_map sends to disk
model = AutoModelForCausalLM.from_pretrained(
    checkpoint, device_map="auto", offload_folder="offload", torch_dtype=torch.float16
)

Issue 3: while running MOSS

TypeError: '<' not supported between instances of 'tuple' and 'float'

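This happens because an OutOfResources error makes the function return a float, which then cannot be compared with a tuple.

Following the fix referenced below, rebuild and reinstall triton from source.

The fix is a one-line change: the value returned when resources run out is changed from a float to a tuple.

| Change | Code |
|---|---|
| Remove | return float('inf') |
| Add | return (float('inf'), float('inf'), float('inf')) |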
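The error can be reproduced in isolation, which shows why the one-line change works. A small sketch in plain Python; the variable names and timing values are illustrative and this is not triton's actual code:

```python
timings = (0.12, 0.10, 0.15)        # assumed shape of a normal result: a tuple of three timings
failed_old = float("inf")           # old value returned when resources run out
failed_new = (float("inf"),) * 3    # patched value: a tuple, like a normal result

try:
    timings < failed_old            # tuple vs float: Python refuses to order them
except TypeError as err:
    print("old:", err)

print("new:", timings < failed_new)  # tuples compare element by element
```

With the tuple form, a failed configuration simply sorts after every successful one instead of raising.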

Issue 4 (to be verified):

In the current version, we have model_class not being an Auto class for (TF) ImageClassificationPipelineTests, and we get test failure TypeError: ('Keyword argument not understood:', 'trust_remote_code')

https://github.com/huggingface/transformers/runs/7421505300?check_suite_focus=true

Adding TFAutoModelForImageClassification in src/transformers/pipelines/__init__.py will fix the issue.